Recently an idea crossed my radar for speeding up messaging producers: batching messages into a single transaction. The idea is that the standard behaviour of a message bus carries overhead that can be optimised away if you group messages into a single transaction.

My initial reaction was that this was unlikely; at best, performance would match that of an untransacted client. The claim came up in conversations about Spring’s JMSTemplate, which on every send walks the object tree ConnectionFactory -> Connection -> Session -> MessageProducer and then closes each object on the way back. Each close causes some network traffic between the library and the bus. I suspected that the discussion hadn’t accounted for caching these objects via a CachingConnectionFactory. I resolved to find out.

My other concern was that while it could be done in the general case, it didn’t make a lot of sense.

Generally speaking, there are two categories of messages that concern an application developer. The first is throwaway messages that aren’t critical: you can choose not to persist them on the broker side and save 90% of the overhead. You can also use asynchronous sends, which don’t persist the messages and save further time by not waiting to confirm to your app that the broker received them. If you lose some, it won’t be a big deal.

The other category is the messages that you really care about: things that reflect or effect a change in the real world, such as orders and instructions. The value of a broker in this case comes from knowing that once it has the message, it will be dealt with.

In ActiveMQ, this guarantee comes via the message store. When you send a message the broker will not acknowledge receipt until the message has been persisted. If the broker goes down, it can be restarted, and any persisted messages may be consumed as though nothing happened, or another broker takes over transparently using the same message store (HA). As you can imagine, there is natural overhead. Two network traversals, and some I/O to disk. Keep this in mind.

I tested a couple of different scenarios.

- JMSTemplate with a CachingConnectionFactory. This is the baseline.

- An untransacted, persistent session. Each message gets sent off to the broker as they come in. The broker places the message in a persistent store. This is like the logic of the JMSTemplate, but where you control the lifecycles of JMS objects.

- An untransacted, unpersisted session. Each message gets sent off to the broker as they come in. The broker stores the message in memory only for delivery.

- Transacted sessions. Messages get bundled together into a transaction and committed.

Messages were posted to a queue with no consumers on a single broker with default settings.

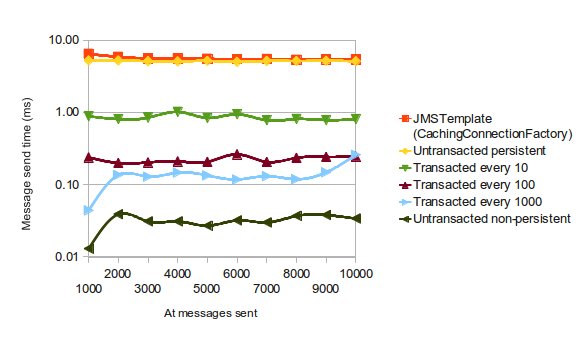

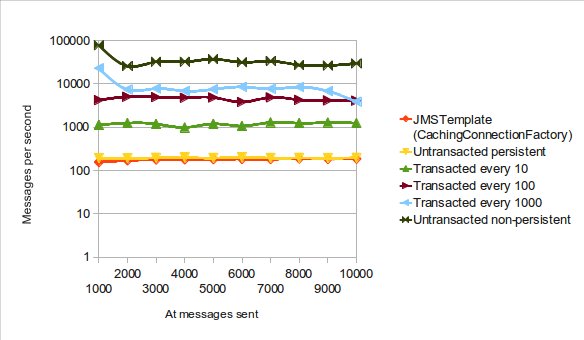

The results surprised me.

Timings should be considered indicative only, and are only there for a relative comparison. Initial spikes should be considered part of a warm-up, and ignored.

Firstly, getting down to the JMS API was consistently faster than using JMSTemplate (not surprising in hindsight, given its object walk). There was also indeed a performance gain from batching messages together for delivery in a transaction. The more messages there were in a batch, the better the performance (I repeated this with 10, 100 and 1000 messages per transaction). The results approached those of the non-persistent delivery mode, but never quite reached them. What accounts for this?

I did some digging, and came up with this description of transactions in ActiveMQ. It appears that there is a separate store in memory for uncommitted transactions. While a transaction is open messages are placed here, and are then copied over to the main store on commit.
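To make that description concrete, here is a toy model of the two-store idea in plain Java. It is only a sketch of my reading of the description, not ActiveMQ code: the class and method names (`ToyTransactionalStore`, `send`, `commit`, `rollback`, `visibleMessages`) are all made up for illustration, and the real broker is far more involved.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Toy model only: this is NOT ActiveMQ code and every name here is hypothetical.
class ToyTransactionalStore {
    // Messages sent inside a transaction that has not been committed yet.
    private final Map<String, List<String>> pendingByTx = new HashMap<>();
    // Stand-in for the main message store that consumers would read from.
    private final List<String> mainStore = new ArrayList<>();

    // Stage a message under the given transaction; it is not visible yet.
    void send(String txId, String message) {
        pendingByTx.computeIfAbsent(txId, id -> new ArrayList<>()).add(message);
    }

    // Copy everything staged under txId into the main store, in send order.
    void commit(String txId) {
        List<String> staged = pendingByTx.remove(txId);
        if (staged != null) {
            mainStore.addAll(staged);
        }
    }

    // Discard the staged messages; the main store is left untouched.
    void rollback(String txId) {
        pendingByTx.remove(txId);
    }

    // Defensive copy of what a consumer could see right now.
    List<String> visibleMessages() {
        return new ArrayList<>(mainStore);
    }
}

class ToyTransactionalStoreDemo {
    public static void main(String[] args) {
        ToyTransactionalStore store = new ToyTransactionalStore();
        store.send("tx-1", "order-created");
        store.send("tx-1", "order-paid");
        System.out.println(store.visibleMessages()); // nothing visible before commit
        store.commit("tx-1");
        System.out.println(store.visibleMessages()); // both messages now visible
    }
}
```

The point of the model is that a send inside a transaction only touches the in-memory staging area, and the main store is only involved at commit time.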

So what to make of this? Should you forgo JMSTemplate and batch everything? Well, as with pretty much anything, it depends.

JMSTemplate is simple to configure and use – it also ties in nicely to the various Spring abstractions (TransactionManagers come to mind). There are certainly some upsides to getting down to the JMS API; however, they come at the cost of extra coding time and of losing those hooks, which you might regret later.

Batching itself is a funny one, in that it’s one of those things that could easily be misused. Transactions are not there for improving performance. They exist as a logical construct for making atomic changes. If you batch up unrelated messages in memory to send to the broker for a performance gain, and your container goes down before you’ve had a chance to commit, you have violated the contract of “this business action has been completed”, and you have lost your messages. Not something you want for important, change-the-world messages.

If you have relatively unimportant messages that won’t affect the correct operation of your system, there are better options available for improving performance, such as dropping persistence.

If, however, you have related messages tied to a single operation, then perhaps getting down to the JMS API and batching these together under a single transaction is something you might want to consider. The key here is ensuring that you don’t lose the real meaning of a transaction.
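As a rough sketch of what "related messages under a single transaction" looks like on the client, here is the commit-or-rollback pattern in plain Java. To keep it runnable without a broker, it uses a hypothetical `TxSession` interface and an in-memory fake rather than the real JMS types. In real JMS the send lives on a `MessageProducer`, `commit()` and `rollback()` live on a transacted `Session`, and all of them throw the checked `JMSException`; the names `TxSession`, `AtomicSender` and `InMemoryTxSession` are mine.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for the small part of a transacted JMS session used here.
interface TxSession {
    void send(String message);
    void commit();
    void rollback();
}

class AtomicSender {
    // Sends every message belonging to ONE business operation, then commits once.
    // If any send (or the commit) fails, roll back so none of them become visible.
    static void sendAtomically(TxSession session, List<String> relatedMessages) {
        try {
            for (String message : relatedMessages) {
                session.send(message);
            }
            session.commit();
        } catch (RuntimeException e) {
            session.rollback();
            throw e; // let the caller decide whether to retry the whole operation
        }
    }
}

// In-memory fake so the pattern can be exercised without a broker.
class InMemoryTxSession implements TxSession {
    private final List<String> staged = new ArrayList<>();
    final List<String> committed = new ArrayList<>();

    public void send(String message) {
        if (message == null) {
            throw new IllegalArgumentException("null message");
        }
        staged.add(message);
    }

    public void commit() {
        committed.addAll(staged);
        staged.clear();
    }

    public void rollback() {
        staged.clear();
    }
}
```

The batch boundary here is the business operation, not an arbitrary count chosen for speed, which is what keeps the transaction meaningful.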

Update 1 (13/11): Replaced graphs with log scale to make the series clearer.

Update 2 (13/11): Thanks to James Strachan for an extended explanation:

The reason transactions are so much faster on the client side can be explained by comparing them to the normal case, where the client blocks until the message has definitely gone to disk on the broker.

In a transaction the JMS client doesn’t need to wait until the broker confirms the message because it’s going straight into the transactional store (memory). Because the broker will complain on a commit if something has gone awry, the client can afford to not block at all doing a send or acknowledge (not even blocking for it to be sent over the socket), so it behaves like a true async operation. This means it’s very fast.

This explains why transactional performance approaches that of async operations as the batch size grows – because for all intents and purposes it is nearly asynchronous. The only cost is that of the synchronous commit(), which is a flush from the transactional store to the journal.
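A back-of-the-envelope model makes the amortisation visible. This is only a sketch under a simplifying assumption: each send inside a transaction costs the client a small fixed amount, and the one synchronous commit is shared across the batch. The numbers (50 and 1000 microseconds) are made up for illustration and are not measurements from my tests; `BatchCostModel` and `perMessageMicros` are hypothetical names.

```java
class BatchCostModel {
    // Average client-side cost per message when sends inside a transaction
    // do not block and only the commit is synchronous.
    static double perMessageMicros(double asyncSendMicros, double commitMicros, int batchSize) {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        return asyncSendMicros + commitMicros / batchSize;
    }

    public static void main(String[] args) {
        double asyncSend = 50.0;  // made-up cost of a non-blocking send
        double commit = 1000.0;   // made-up cost of one synchronous commit
        for (int batch : new int[] {10, 100, 1000}) {
            System.out.printf("batch=%d -> %.1f us/message%n",
                    batch, perMessageMicros(asyncSend, commit, batch));
        }
    }
}
```

With these made-up inputs the per-message cost falls from 150 to 60 to 51 microseconds as the batch grows from 10 to 100 to 1000: it closes in on the cost of an async send but never drops below it, which matches the shape of the curves I saw.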

You could argue that this doesn’t really affect the actual throughput – as the difference between batching & no transactions is just the client sitting around blocking. The benefit of transactions is that they make each client much more effective, i.e. to get equivalent throughput without them you would need more clients running concurrently. In the case of Camel, this could be achieved by configuring a larger thread pool.
